NOTE: As of 2020-06-22, I have a new NAS build. You can read about it here.

For about the last seven years (since 2012), I’ve been using a Synology NAS (Network Attached Storage) device in my house as a central repository for files, photos, music, and movies. It has generally worked well. However, there have been a number of serious problems with the Synology NAS I bought (DS413j). First, the amount of RAM is limited and cannot be upgraded. Second, the CPU (a Marvell Kirkwood ARM processor) cannot be upgraded either. While the box is small and draws very little power, the inability to upgrade the hardware (other than hard drives) means that I’m basically stuck with what was considered cutting edge back in 2012 when I bought it.

In practical terms, these limitations have meant that I have not been able to run Crashplan on my Synology box since the first year I owned it, because I am backing up more than a terabyte of files and the 512 MB of memory cannot handle that. (I created a workaround where I run Crashplan on a different computer and back up the files on the Synology box over the network.) It also means that I haven’t been able to run the latest version of Plex for the last 4 years because Plex stopped supporting the CPU in my Synology box. This came to a head about a month ago when the latest Plex client on my Roku stopped working with the very outdated version of Plex server on my Synology NAS. As a result, I was no longer able to serve videos to my Roku, which was one of the primary reasons I have a NAS in the house in the first place.

The convenience of having a pre-built NAS with a web interface has been nice. There is a lot to like about Synology products. However, you are locked into their hardware and their software and are restricted by their timelines for upgrading to the latest software. Additionally, my Synology NAS, which is a 4-bay device, has a problem with one of the bays that ended up destroying two hard drives, so I only have 3 usable hard drive bays. And Synology devices are crazy expensive. Given how I use my NAS, paying what they now charge for a high-end model that might only temporarily meet my needs doesn’t make a lot of sense.

So, I finally decided that it’s time to go back to building my own NAS (I had one for a short while before). As I started researching what I wanted for my Do It Yourself (DIY) NAS, I basically went down a rabbit hole of options: Which Operating System (OS)? What hard drives? What file system for

DIY NAS: OS (Operating System) Options

As a long-time Linux user, I was never going to consider anything but a Unix-like system, so Windows was never an option. Those who use Windows could certainly consider it, but I have no interest in using it for my NAS. That, however, didn’t narrow my options that much. Among Unix-like systems, all of the following are real contenders: Unraid, FreeNAS, Amahi, Open Media Vault,

Unraid (which isn’t free) does allow you to install an OS on top of their OS, so that might not be a problem. But I’m also not convinced that I need Unraid for drive management (as I’ll detail below when I discuss how I organized my hard drives).

There may very well be advantages to one of these NAS-specific OSes that I am missing. But after having suffered under the proprietary lock-in and the inability to upgrade my software under Synology, I realized that I was very wary of getting locked into a pre-packaged OS that would mean I couldn’t install what I want to install. Ultimately, I decided to just install Kubuntu 18.04 on what would become my new DIY NAS box. Some might note that I should have just gone with Ubuntu Server, as it uses less CPU power and memory without a graphical front end (KDE). I considered it. But that would also mean that I would have to manage the entire device through the command line or try to find some other software (e.g.,

Final choice on OS: Kubuntu 18.04, which is a long-term support release (important for future upgrades to the OS).

DIY NAS: Hardware Options (CPU, motherboard, RAM, etc.)

When Plex stopped working with our Roku, my wife quickly realized it. We all use Plex on a regular basis to watch our movie and TV collection. When I told her what the problem was and said that I thought I was going to need to replace our NAS, she asked me how much it was going to be. Being honest, I told her it could be fairly expensive, depending on what I decided to build. Luckily, we are in a position financially where I could spend upwards of $1,000 on a new NAS if I needed to, and she said that would be fine.

I spent quite a bit of time considering hardware. The biggest question was whether I wanted to go with dual Xeon processors to build out a real server or go with what I am most familiar with, AMD CPUs like Ryzen (I build all my own desktop computers). The dual Xeon approach made a lot of sense, as they can manage a lot of concurrent threads. The latest high-end processors from AMD (and Intel, but I’m an AMD guy), like Threadripper, also manage a lot of simultaneous threads. However, these are all pretty expensive processors. Even somewhat older Xeons can be a little pricey if I’m trying to get something that was made in the last few years. Additionally, the motherboards that go with these can be almost as expensive. I debated these options for quite a while.

Then, I had an idea. I had an older computer lying around from my latest upgrade (I usually upgrade my desktop and give my immediate past desktop to my wife; during the last upgrade, I ended up replacing the entire machine, case included, which left a third desktop computer sitting unused). I wanted to test out Plex and Crashplan on that computer just to make sure they worked. It’s an older AMD Athlon II X4 620 that has 24

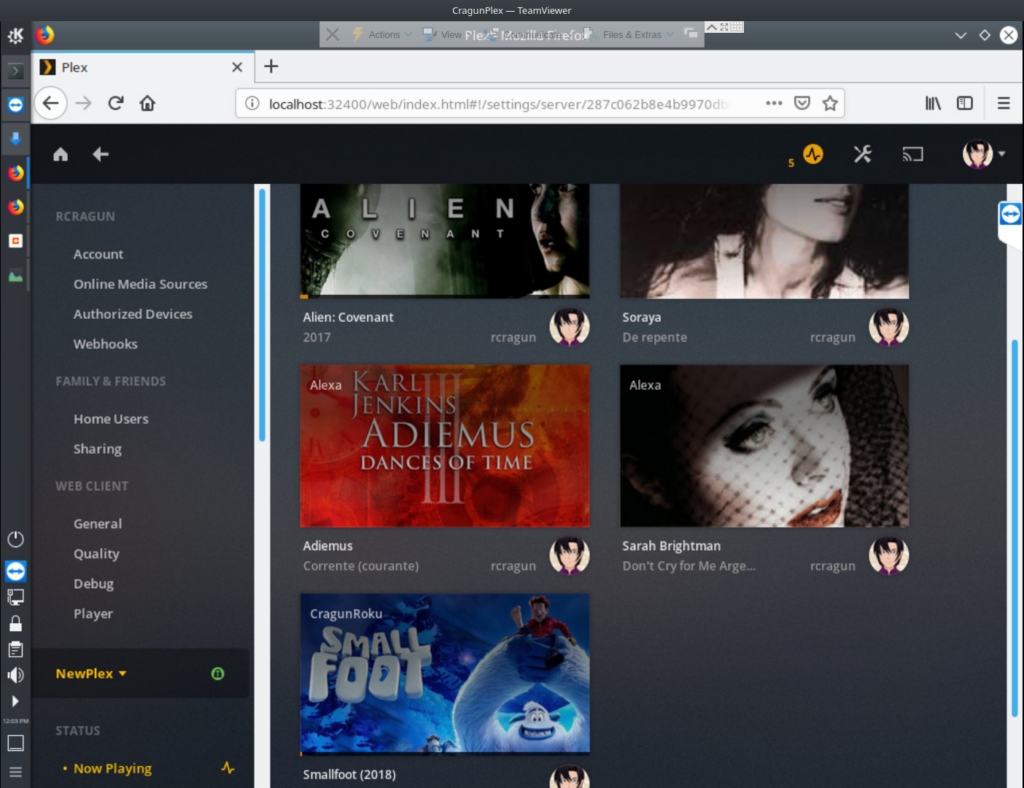

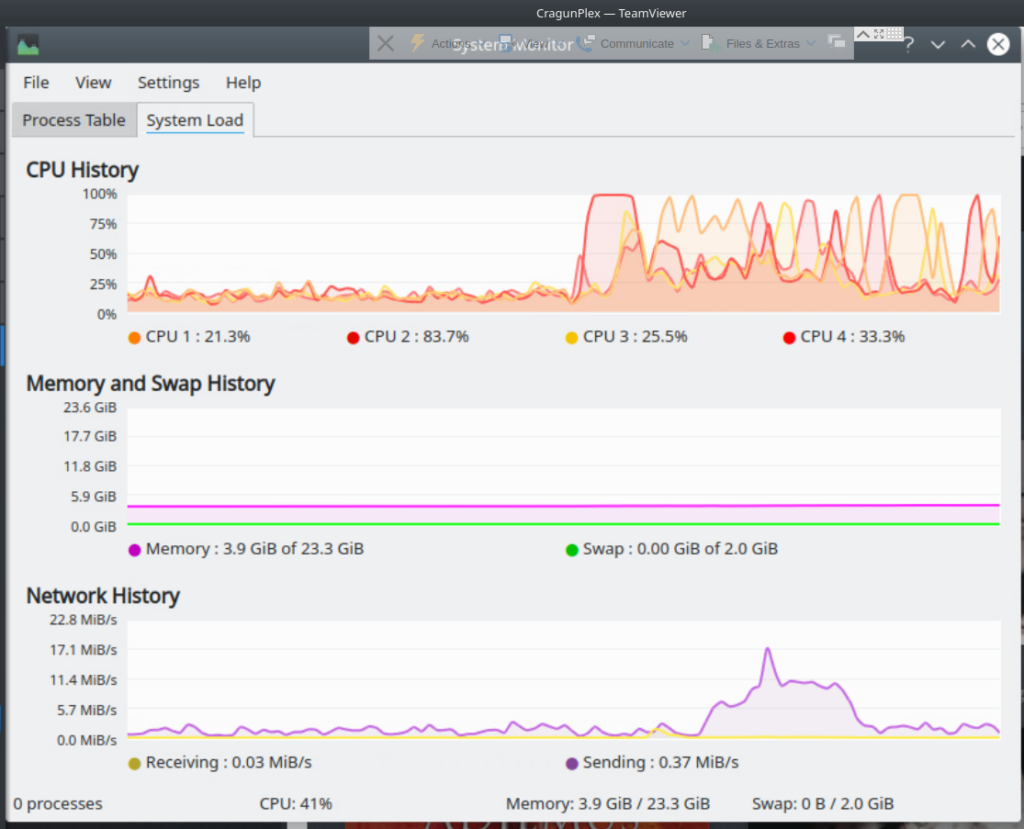

Once I got everything set up (see below for details), I wanted to see just how much my NAS could handle, especially since the old Synology NAS struggled with streaming just a single HD stream. To run my

The big question was whether my 4 cores could handle this. Here’s how things looked:

Conclusion: My older AMD Athlon II X4 620 was more than up to the task. With two simultaneous 1080p video streams and three MP3 streams, the server was working, but it was far from maxing out. Since we have just one TV in the house, the odds of us ever needing to simultaneously stream more than two videos are almost zero, and that’s true even considering that I am allowing some of my siblings access to my Plex server.

What does this mean for the average person building a DIY NAS? Unless you have, let’s say, ten 4K TVs in your house and you want to simultaneously stream 4K videos to all of them, you probably don’t need the latest and greatest CPU in your NAS. In all likelihood, I’ll let the system I have run for a year or two (unless there is a problem), and then upgrade my box, my power supply, my

Where you probably do not want to skimp is on RAM. As I have been transferring my files and media to my new NAS from the old one, I have seen my RAM usage go as high as about 16 GB at different times. That has been the result of high-bandwidth file transfers, Plex indexing the media files (video and audio), and simultaneous streaming of files. In short, you can go with an older, not crazy expensive, multi-core CPU for your NAS and be fine, but make sure you’ve got at least 16 GB of RAM, maybe more.

DIY NAS: Hard Drive Options

Where I got the most bogged down in my research was in deciding on how many hard drives to use, what file system to use, and whether or not I should use a RAID for the drives. In my Synology box, I had just two 4 terabyte hard drives in a RAID 1 (or mirror) arrangement. While I am getting close to filling up the 4 terabytes (I have about 3 terabytes of photos, movies, files, music), I was more concerned with not losing data. With a RAID 1, all of my data was mirrored across the two hard drives. Thus, if I lost a drive, I would still have a copy of all of the data.

Additionally, I pay for unlimited storage through Crashplan. Everything on my NAS is backed up off-site. This way, I have a local copy of all of my files (the mirrored drive) and an off-site copy of all my files (in case there is a fire or catastrophic failure). Since I do back up everything off-site, I could theoretically just go for speed with, for instance, a RAID 0 that stripes all my data between drives. But

As I considered the options for hard drives, my initial thought was to use the same system but increase the size of the hard drives. There are now hard drives with capacities in excess of 10 TB. They are expensive, but two 10 TB hard drives in a RAID 1 would basically replicate what I was doing but give me plenty of room to grow my photo, video, and file collection. I was just about to pull the trigger on this plan until I realized that another option might make more sense.

RAID 6 requires at least 4 hard drives, but offers a number of advantages over RAID 1 (e.g., similar speed but better redundancy, as well as the possibility of hot swapping hard drives). And, if I went with RAID 6, I could buy cheaper 4 or 6 TB hard drives instead of 10 TB hard drives and end up with nearly as much or more space than I would get with two 10 TB hard drives, for less money. From the many articles I read on this, it seems that lots of corporations use RAID 6 given its redundancy and speed advantages. I ultimately decided to go with a RAID 6 with four 4 TB hard drives. Because RAID 6 devotes two drives’ worth of space to parity, that left me with 8 TB of usable storage (double the 4 TB I had before) while improving my redundancy: the array can survive the loss of any two drives.
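The capacity arithmetic behind that comparison is easy to sanity check. Here is a minimal sketch, assuming usable space is simply (number of drives minus redundant drives) times drive size; it ignores file system overhead and the TB versus TiB difference, and the function name `usable_tb` is just something made up for illustration:

```shell
#!/bin/sh
# usable_tb DRIVES SIZE_TB REDUNDANT_DRIVES
# Rough usable capacity in TB. A simplification: real arrays lose a bit
# more to file system overhead and metadata.
usable_tb() {
    drives=$1
    size_tb=$2
    redundant=$3
    echo $(( (drives - redundant) * size_tb ))
}

# RAID 6 gives up two drives' worth of space to parity.
echo "RAID 6, four 4 TB drives: $(usable_tb 4 4 2) TB usable"
echo "RAID 6, four 6 TB drives: $(usable_tb 4 6 2) TB usable"

# A two-drive RAID 1 mirror keeps one drive's worth of data.
echo "RAID 1, two 10 TB drives: $(usable_tb 2 10 1) TB usable"
```

Swapping in other drive counts and sizes makes it quick to compare layouts before buying anything.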

I still needed to figure out what file system to use. Having worked with Linux for over a decade, I typically use ext4 on all of my computers. It doesn’t have any file size limitations that I would ever run into and does everything I need. However, I had been hearing about ZFS for a while (as well as Btrfs) and what I had heard made me think that ZFS was really what I should be running on my NAS. While it may slow down my NAS a little bit, its protection against bit rot and its built-in redundancy meant the impact on performance would likely be worth it. However, ZFS doesn’t come as a standard file system option in Linux distributions. I have used various disk partitioning programs enough to know which file systems ship with Linux distributions, and ZFS is not one of them. I was a little worried about what might be entailed in installing ZFS and setting it up in a RAID 6 (in ZFS terms, it’s called RAIDZ2). Once I found this handy guide, I realized that it wasn’t that difficult and was something that I could easily do. Before I headed down this path, I tested the guide with a spare drive I had lying around, and it really was simple to set up ZFS as the file system on a drive. That test convinced me that ZFS was the route to go for the file system on my drives. Thus, my four 4

Update: 2019-08-18 – I restarted my file server after installing system updates and my ZFS pool was missing. That was terrifying. I finally found a solution that was a little nerve-wracking but worked. Somehow, the mount point where my ZFS pool was supposed to mount either got corrupted or had something in it (for me, it was /ZFSNAS). I renamed that folder:

sudo mv /ZFSNAS /ZFSNAS-temp

I was then able to import my ZFS pool with the following command:

sudo zpool import ZFSNAS

Apparently, this was the result of an upgrade to the ZFS software. It happened again right after I rebooted. There is a systemd target in newer ZFS packages that has to be enabled in order for pools to be imported and mounted after reboots:

sudo systemctl enable zfs-import.target

Now, when I reboot, my ZFS pool comes right back up.

Update: 2019-11-13 – I installed an update and restarted, and now my ZFS pool has disappeared again. The solution above brought it back, but enabling zfs-import.target doesn’t bring it back up anymore on the next reboot. I tried enabling all of the following (from here):

sudo systemctl enable zfs.target

sudo systemctl enable zfs-import-cache

sudo systemctl enable zfs-mount

sudo systemctl enable zfs-import.target

None of those worked. Not sure what is going on but I’m pretty sure it’s tied to ZFS changes in the latest Ubuntu/Debian kernel. Argh! I also had to do the following after I brought the pool back up to make sure it was shared across the network:

sudo systemctl restart nfs-kernel-server

NOTE: It also looks like I set up my ZFS pool in a problematic fashion. I created one pool, but no datasets whereas I should have had one pool with multiple datasets. Datasets are where the files should be stored, not in the pool directly. I’m now struggling to set up automated snapshots.
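For anyone setting this up from scratch, here is a rough sketch of what one pool with multiple datasets could look like. It is a dry run: it only prints the `zfs create` and `zfs snapshot` commands rather than running them. The pool name ZFSNAS is mine; the dataset names (photos, movies, music) are made up for illustration, and this doesn’t cover moving existing files into the datasets or scheduling the snapshots.

```shell
#!/bin/sh
POOL="ZFSNAS"                    # pool name used in this post
DATASETS="photos movies music"   # hypothetical dataset names

# Print one "zfs create" command per dataset (nothing is executed).
plan_datasets() {
    for ds in $DATASETS; do
        echo "sudo zfs create $POOL/$ds"
    done
}

# Print one "zfs snapshot" command per dataset, tagged with the given label.
plan_snapshots() {
    label=$1
    for ds in $DATASETS; do
        echo "sudo zfs snapshot $POOL/$ds@$label"
    done
}

plan_datasets
plan_snapshots "$(date +%Y-%m-%d)"
```

Once the printed commands look right, they can be run by hand. Snapshots are taken per dataset, which is part of why storing everything directly in the pool makes automated snapshots awkward.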

DIY NAS: What software to install?

I have been running Plex for about 6 years to manage my media collection. With my Synology box, the limited RAM and weak CPU meant that it had a pretty hard time managing my media collection. It would stream videos across my network to my Roku device, but only if they were in a specific format (mp4, which was probably a limitation of the Synology box, as that is not a problem with Plex or Roku). Setting up a video slide show on my Roku from my Synology box was basically impossible, as the CPU and RAM just couldn’t cut it. While I could play my music across the network, the Synology box would not play nice with my music in Plex either. As a result, I used Plex just to watch videos and nothing else, even though it is a great way to manage all sorts of media: video, photos, and music. Thus, one of the requirements of my new DIY NAS was that it be able to take advantage of all the features that Plex has to offer (I have a lifetime Plex Pass). So, Plex was the core requirement for my NAS.

But I also wanted my NAS to run Crashplan. As noted above, my Synology box didn’t have enough RAM to run Crashplan, which meant I had to run it on my desktop to back up the files on my NAS to Crashplan. It was a hack to get around the limitations of the Synology NAS (FYI, you need about a gigabyte of RAM for every terabyte of files you want to back up to Crashplan). Plex and Crashplan were minimum software requirements. My DIY NAS had to be able to run both of those and run them well.
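To put that rule of thumb in numbers, here is a small sketch that compares available RAM against the roughly 1 GB per terabyte estimate. The function names are made up for illustration, 1 GB is counted as 1024 MB, and the 3 TB figure is just the approximate size of my collection:

```shell
#!/bin/sh
# ram_needed_mb DATA_TB
# Estimated RAM (in MB) for Crashplan, using the ~1 GB per TB rule of thumb.
ram_needed_mb() {
    echo $(( $1 * 1024 ))
}

# has_enough_ram AVAILABLE_MB DATA_TB
# Exit status 0 if the available RAM meets the estimate, 1 otherwise.
has_enough_ram() {
    [ "$1" -ge "$(ram_needed_mb "$2")" ]
}

# The 512 MB Synology box versus a 24 GB DIY box, each backing up about 3 TB.
for ram_mb in 512 24576; do
    if has_enough_ram "$ram_mb" 3; then
        echo "$ram_mb MB: enough for 3 TB"
    else
        echo "$ram_mb MB: too little for 3 TB"
    fi
done
```

This only covers Crashplan; Plex and file transfers need their own share of memory on top of that.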

I do occasionally download stuff via

The last piece of software I really needed was a way to control my NAS remotely. The goal was to basically have it run headless, stick it in a corner in my office, and just let it do

I also installed SSH so I could access the NAS remotely in case the GUI VNC programs were having issues. I followed this guide.

(Update: 12/7/2019. I strongly encourage the use of tinyMediaManager for managing your videos and TV shows. It’s the slickest software I’ve found for doing this and runs great on Linux.)

DIY NAS: NFS Fileserver

This guide made it very easy.

DIY NAS: Specifications

Here’s the final rundown of what I put together:

OS: Kubuntu 18.04 (long term support)

Hard Drives: ZFS filesystem; four 4 terabyte drives in a RAIDZ2 for 8

CPU: AMD Athlon II X4 620

Motherboard:

RAM: 24 GB

Software: Plex, Crashplan, NFS, TeamViewer, kTorrent

UPDATE – 2019-04-24

It’s been almost 4 months running this NAS box. Generally, it’s worked really well. I have, however, run into two problems.

The first one was pretty recent (about 2 weeks ago). I’m not sure what happened, but I’m assuming some update to Kubuntu 18.04 over the last month led to the screen going black when I would VNC into the box. The server was still working, but I couldn’t get it to display anything via VNC until I restarted the box manually (SSH on the NAS box would have given me another option for that). I’m still not 100% sure what the problem was, but it was somehow related to KDE. I ended up installing Unity/GNOME as the desktop environment about a week ago, and the problem is gone. The box has been running without a hitch since then.

The only other issue I will note is that there have been two times when I was streaming shows from my NAS box to my Roku and the box had to transcode a 1080p file. The 4-core processor inside it was not up to the task. Playback stopped several times and buffered a lot. The box can easily stream a 1080p file in almost any format (both Plex and Roku can handle almost every format) and can even manage to stream two of them simultaneously (tested on my TV and phone at the same time), but the two movies it had issues with were in a weird MKV format that my Roku couldn’t handle. I ended up ripping them into a different MKV format and, voila, problem solved. The point being: my NAS box is a little underpowered. But, a year or two from now, the price of an AMD Threadripper will probably be half of what it is now. I’ll swap that in and will have all the power I need.

So, 4 months into this, it’s working extremely well. I’d give it a 99 out of 100.

Update: 2020-06-21

As I predicted, after about 1 1/2 years, it was time to upgrade my NAS. I started a new post detailing my new build and what I changed here.
